Enrollment dashboards keep improving, while completion curves stay largely the same.

Across online programs in higher education, access continues to expand, and platform usage rises, creating activity signals that look healthy at a glance. When teams examine engagement more closely, interaction remains shallow, participation tapers off after the first few weeks of a term, and feedback cycles slow as programs grow.

Discussion activity increases without much real exchange, assessment attempts reflect exposure more than understanding, and synchronous attendance fades once the early momentum wears off.

We’ve seen this pattern trace back to systems designed for efficient delivery, rather than for sustaining engagement over time.

This piece looks at the structural forces shaping student engagement in online higher education, including interaction design, motivation mechanics, applied learning approaches, and blended and hybrid course models.

Why Student Engagement Declines in Online Learning Programs Over Time

The decline rarely announces itself. In the opening weeks, participation looks steady enough; assignments come in when they’re supposed to, and live sessions keep their numbers, which makes it easy to assume things are holding. Most teams do not flag an issue early on. The signals still look acceptable.

Later in the term, the change becomes easier to sense than to measure. Responses lose depth, interaction rarely moves beyond the question itself, and participation stabilizes at the minimum needed to move forward.

We’ve seen this tied to how many online programs are structured around availability instead of continuity. Content is easy to access, and timelines are clear, but most activities sit on their own. Because one assignment rarely depends on the last and very little carries forward, students begin to treat the work the same way, showing up to handle what’s due and then stepping away until something else demands their attention.

Most systems quietly reinforce this pattern. Platforms are built to track completion more easily than effort or understanding, which pulls attention toward what can be counted.

Over time, engagement shifts to align with what the system recognizes, not because students lack motivation or commitment, but because the design choices prioritize consistency and scale over sustained involvement.

The next layer shows up in how interaction itself is designed, which is often where engagement either starts to deepen or continues to thin out.

How Interaction Design Shapes Student Engagement in Online Courses

Interaction design quietly shapes where effort goes once students enter a course. In many online programs, it sits alongside content rather than functioning as part of the learning itself, which makes participation feel optional. When interaction isn’t necessary to move forward, engagement becomes conditional: students participate when it’s visible and step back when it isn’t.

Common Interaction Design Patterns That Limit Engagement

Some of the constraints show up at the activity level.

- Discussion prompts are used even when dialogue isn’t essential to the learning goal.
- Response formats stay the same from week to week, even as the work gets more complex.
- Students are rarely asked to make choices that carry forward or shape what they’ll be asked to do next.

Other limits come from timing and feedback.

- Feedback often arrives after the moment when it could have made a difference.
- Instructor responses acknowledge that work was submitted but stop short of pressing on reasoning or approach.
- Peer contributions disappear once the activity closes, which quietly signals that they don’t matter much beyond completion.

There are also cues built into the structure of the course itself.

- Interaction isn’t tied to progression, so skipping it doesn’t change what happens next.
- Activities don’t accumulate toward anything visible.
- Engagement doesn’t alter the path of the course, which makes it easier to treat interaction as optional, even when it’s encouraged.

While each of these choices is defensible on its own, together they narrow engagement to compliance, with students participating because activity is visible rather than because it advances learning.

When interaction is designed as a mechanism instead of an add-on, the pattern shifts. Tasks call for judgment, formats change with the work, and feedback shapes what comes next, which makes engagement harder to bypass because it affects progression. This is often the layer where teams like MITR Learning and Media focus, aligning interaction with learning intent rather than surface activity.

Whether this holds over time depends on motivation, which becomes the next structural layer.

Common Interaction Design Gaps in Online Higher Education

- The same interaction formats get reused week after week, even as learning goals shift, and the work becomes more complex.
- Discussion boards end up carrying most of the interaction load, whether or not real dialogue is needed to support learning.
- Prompts lean toward recall or opinion, with few asking students to make decisions or apply ideas in a concrete way.
- Response expectations stay loose, which makes surface-level participation feel sufficient.
- Feedback often arrives after the activity has closed, missing the moment when it could have shaped understanding.
- Instructor responses tend to confirm that work was completed, rather than pressing on reasoning or approach.
- Peer contributions fade once an activity ends and are rarely referenced again, signaling that they carry little weight.
- Interaction isn’t tied to progression, so skipping it doesn’t change what happens next.
- Activities don’t build toward a visible outcome, which makes each one feel self-contained.
- Engagement is tracked as presence and completion, not the quality of contribution.

How Motivation Works in Online Learning Environments in Higher Education

Motivation in online learning is often treated as an individual trait, but it functions more like a system response. Students react to how effort is organized, how progress is signaled, and whether their actions lead anywhere. When those cues are weak, motivation fades, even when the content itself is solid.

In many courses, progress is defined by completion rather than momentum. Tasks sit in isolation, feedback confirms submission without shaping what comes next, and assessment timing interrupts learning flow. Over time, motivation narrows to managing deadlines instead of staying engaged.

Motivation tends to hold when effort changes what follows: outcomes influence progression, and feedback carries forward in ways that help attention stay steady. Without those structures, motivation stays fragile.

Why Applied Learning Supports Motivation in Online Courses

Applied learning supports motivation because it changes the logic of effort. In many online courses, students put in work first and only understand its value later, usually through grades or completion markers. Applied tasks surface relevance while the work is happening.

Motivation = Perceived relevance × Visible consequence ÷ Effort uncertainty

When relevance is clear and outcomes lead somewhere concrete, effort feels justified. Applied activities ask students to decide, produce, or respond to realistic conditions, even in small ways. The task explains why it matters. Without that structure, motivation leans on discipline. With it, motivation is designed into the work.
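The heuristic above is a way of thinking rather than a validated measure, but a rough sketch can show how the three terms trade off. The function name, the 1 to 5 rating scale, and the example ratings below are illustrative assumptions, not figures from any study or platform.

```python
def motivation_score(relevance: float, consequence: float, uncertainty: float) -> float:
    """Heuristic from the text: relevance x consequence / effort uncertainty.

    All three inputs are hypothetical ratings on an assumed 1-5 scale.
    """
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    return relevance * consequence / uncertainty


# Assumed ratings for an isolated weekly quiz: low relevance,
# little visible consequence, and high uncertainty about the effort needed.
isolated_quiz = motivation_score(relevance=2, consequence=1, uncertainty=4)

# Assumed ratings for an applied task whose output feeds later work.
applied_task = motivation_score(relevance=4, consequence=4, uncertainty=2)

print(isolated_quiz)  # 0.5
print(applied_task)   # 8.0
```

Under these assumed ratings, the applied task scores far higher because relevance and consequence multiply while lower uncertainty shrinks the divisor. The absolute numbers carry no meaning; only the direction of the comparison does.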

The Role of Applied Learning in Sustaining Student Engagement Online

Motivation can get students started. Applied learning is what keeps them there.

When tasks ask students to apply concepts, make decisions, or produce work that carries forward, engagement becomes harder to drop because effort has consequences that extend beyond a single activity.

Applied learning creates continuity in online courses:

- Tasks connect across weeks
- Outputs accumulate instead of resetting
- Earlier decisions shape later work

Students aren’t responding to isolated prompts. They’re working inside a structure that holds.

This structure also reduces ambiguity, since learners can see what they’re building toward and why sustained effort matters, rather than relying on reminders and deadlines to stay involved as the course moves on.

Blended and Hybrid Learning Models and Their Impact on Student Engagement

Blended and hybrid models shape engagement less through format and more through coordination. When online and in-person components are loosely connected, students experience them as parallel tracks, which causes engagement to fragment and effort to flow toward whatever feels most immediate, often assessments or live sessions, while other elements become secondary.

This pattern is easier to see when looking at how the two modalities align in practice.


| Design Alignment | Engagement Pattern |
|---|---|
| Online and in-person components repeat the same content | Students prioritize one modality and skim the other |
| Live sessions are not referenced in online work | Attendance drops after early sessions |
| Online tasks prepare for in-person activity | Participation increases across both formats |
| In-person discussions shape later online assignments | Engagement carries forward between sessions |
| Each modality has a defined role in progression | Effort distributes more evenly over time |

Engagement improves when each modality serves a distinct purpose within a shared sequence. Problems emerge when blended delivery increases workload without increasing dependency. Strong models reduce redundancy, clarify intent, and rely on coordination rather than volume to sustain engagement.

Design Choices That Shape Engagement Over Time

Student engagement in online and blended learning tends to weaken when design decisions are treated as isolated choices. Interaction, motivation, applied learning, and delivery models reinforce one another, whether that’s planned or not. Programs that sustain engagement over time usually approach these elements as a system, rather than expecting individual courses or instructors to compensate for structural gaps.

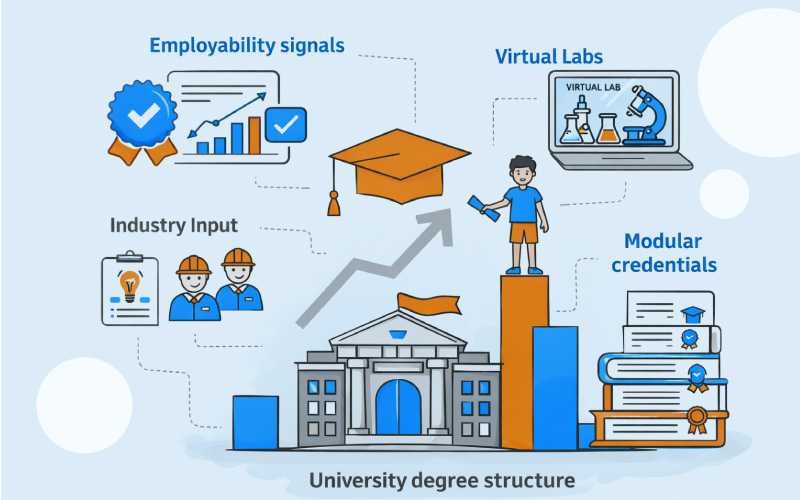

This is the level where MITR Learning and Media’s higher education services align learning design, media, and program structure, so engagement is supported by the system itself.

Talk through engagement challenges with MITR Learning and Media to clarify where design constraints are limiting engagement and where change is feasible.

FAQs

Why is accessibility essential to STEM education for students with special needs?

Accessibility in STEM eLearning means that all students, regardless of gender or special needs, can take part in learning programs. It's a step towards eliminating educational inequalities and fostering diverse innovation.

In STEM education, what are some common problems encountered by students with special needs?

Some common issues are a course format that is not complex, non-adapted labs and visuals, insufficient assistive technologies, and no customized learning resources. Beyond these, there are systemic issues such as learning materials that are not inclusive and teachers who are not trained.

How can accessibility be improved in STEM eLearning through Universal Design for Learning (UDL)?

Through flexible teaching and assessment methods, UDL improves accessibility in STEM content. UDL also allows learners to access and engage with content in multiple ways and to demonstrate their understanding of it.

What are effective multisensory learning strategies for accessible STEM education?

Multisensory learning strategies in accessible STEM include graphs with alt text, audio descriptions of course materials, tactile models that convey visual content through touch, captioned videos, and interactive simulations that give students a choice in how they access physical, visual, auditory, video, and written representations of content.

Which assistive technologies are required to provide accessible STEM material?

Providing access to STEM material requires technologies such as screen readers, specially designed input apps for mathematics, braille displays, and accessible graphing calculators.

How can STEM educators approach designing assessments for students with special needs?

When creating content for students with special needs, useful tactics include adaptive learning pathways in more than one format, oral and project assessments, and multiway feedback.

What is the role of schools and policymakers in supporting accessible STEM education?

Educational institutions should focus on educating trainers and support staff. They can also invest in assistive technology and work towards curricular policies.

Can you share examples of successful accessible STEM education initiatives?

Initiatives like PhET Interactive Simulations, Khan Academy accessible learning resources, Labster virtual laboratory simulations, and Girls Who Code’s outreach are examples of effective practice.

How can Mitr Media assist in creating accessible STEM educational content?

Mitr Media is focused on designing and building inclusive e-learning platforms and multimedia materials with accessibility standards in mind so that STEM material is usable by all learners at different levels of need.

What value does partnering with Mitr Media bring to institutions aiming for inclusive STEM education?

Mitr Media has expertise in implementing assistive technology, enacting Universal Design for Learning, and providing ongoing support to organizations undergoing transformation, helping them turn their STEM curriculum into an accessible and interesting learning experience.