The question usually comes up in a leadership or board discussion that was meant to focus on growth or performance. Someone asks whether the organization feels confident about workforce readiness for the year ahead. Another leader asks why teams that completed the same training still make different decisions in similar situations. The room pauses, not because learning did not happen, but because explaining its impact feels harder than expected.

Most enterprises recognize this moment. Corporate learning platforms remain active, and corporate learning management systems continue to show strong participation across roles and regions. AI in corporate learning has increased speed and coverage. On dashboards, learning appears to be under control. In decision forums, leaders struggle to explain how all that activity translates into consistent execution.

That struggle matters. Boards do not review learning activity for reassurance. They are deciding whether the business can rely on its people to execute strategy, manage risk, and make sound decisions as complexity increases.

Organizations rarely arrive here suddenly. Learning keeps pace operationally while roles, priorities, and expectations change underneath it. AI-powered platforms accelerate delivery, but they do not automatically create capability or governance. Mitr learning and media works with enterprises when this gap becomes visible, helping leaders realign AI in learning and development around decision clarity and accountability before uncertainty hardens into risk.

The enterprise learning problem leaders encounter after AI adoption

Most of these challenges surface not because enterprise learning lacks scale or investment, but because learning systems are asked to support complex decisions without being designed for decision consistency. After introducing AI into learning systems, leaders typically encounter a recurring set of issues:

AI and learning strategies prioritize speed and scale over decision support

AI tools for corporate training focus on faster content production, personalization, and automated updates, which improve efficiency but do not address how decisions change in real work.

Learning expands faster than alignment

As roles evolve and policies change, training content often remains live without reflecting current expectations, creating gaps between learning and work.

AI accelerates misalignment when applied too early

AI in learning and development amplifies activity when teams automate before defining which decisions learning must support.

Capability becomes an assumption rather than a designed outcome

In this environment, AI for learning increases participation while judgment, consistency, and accountability remain underdeveloped.

This pattern appears most clearly after enterprises introduce AI in corporate learning without redefining how decisions should change.

Why faster learning output feels like progress until scrutiny begins

AI-powered platforms make learning easier to distribute and faster to consume. Learners receive answers quickly and access content in more formats than before. For corporate learning and development teams under pressure to deliver at scale, this efficiency creates visible momentum.

However, speed driven by AI in corporate learning does not strengthen judgment by default. When AI and learning initiatives prioritize content delivery over structured practice, learners spend less time thinking through consequences. They recognize information but struggle to apply it consistently. Over time, learning feels modern yet fragile.

Early warning signs appear quietly. Managers interpret the same guidance differently across regions. Teams trained on identical material make inconsistent decisions. Leaders notice variation without clear ownership. At this stage, dashboards still look healthy, but confidence begins to weaken.

Why traditional learning metrics fail leaders and auditors

Most corporate learning management systems rely on metrics designed to measure delivery efficiency rather than enterprise readiness. While these indicators help teams track activity, they consistently fall short during leadership reviews and audits. These gaps explain why AI in corporate learning often looks successful operationally but weak under scrutiny.

This lack of confidence comes up repeatedly in conversations with HR and learning leaders. In recent global skills research, only a small share of respondents said they believe their workforce is genuinely equipped to meet today’s business goals, despite sustained investment in learning. That disconnect is why traditional learning metrics fail to reassure leaders when scrutiny increases.

Engagement and personalization do not prove decision quality

Completion, engagement, and personalization show that learners accessed relevant content. They do not show whether learners can apply guidance correctly in real situations. Enterprises often see high engagement, while decision quality varies across teams. Without evidence of how people interpret and act on learning, these metrics provide weak signals of readiness.

Standard metrics fail to show alignment and consistency

Activity metrics rarely demonstrate whether training reflects current policies or produces consistent decisions across roles and regions. During audits and leadership reviews, teams struggle to show traceability between learning, policy updates, and real-world behavior. Dashboards track delivery efficiency, not alignment or consistency.

These limitations explain why confidence erodes even when AI for learning initiatives appear successful on paper.

What effective AI-enabled learning looks like before tools enter the conversation

Enterprises that succeed with AI and learning take a different approach. They define learning problems before introducing technology. They clarify which decisions must improve before expanding content. They treat practice as a core design requirement rather than an optional enhancement.

This shift becomes clearer when enterprises move beyond experimentation and focus on the practical use cases of AI in learning that directly support decision-making, consistency, and governance at scale.

Effective AI for learning strategies share three characteristics. They start with decision clarity. They design structured practice into learning experiences. They embed governance before scale. These foundations allow AI tools for corporate training to support learning rather than distort it.

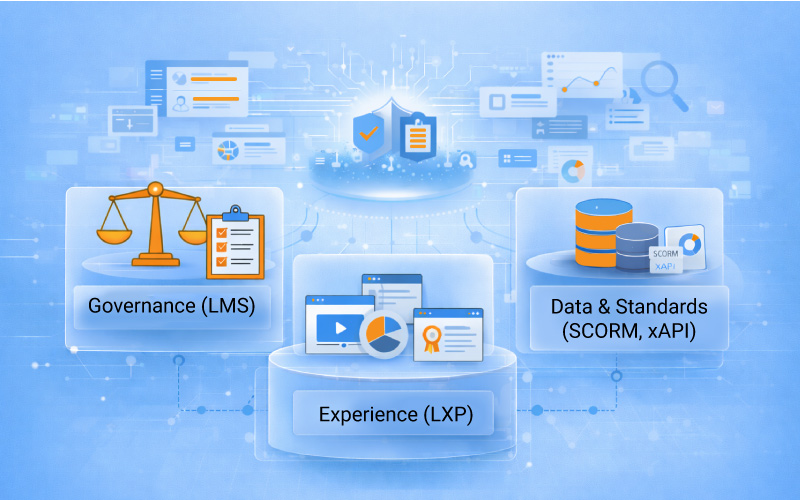

Mitr’s capability-first framework for AI in enterprise learning

In this framework, AI supports capability building, not rapid content expansion. Judgment, practice, and defensibility come before automation, enabling scale without compromising enterprise control.

This approach reflects how artificial intelligence in learning design delivers value when it supports judgment, practice, and governance rather than simply accelerating content production.

Phase one: decision and capability diagnosis

We begin by identifying the specific decisions learners must make differently to reduce risk or improve performance. This step focuses on real work scenarios, current policies, and role expectations. Teams map capability gaps instead of content gaps, ensuring that AI for learning supports relevant behavior rather than outdated assumptions.

Phase two: AI-augmented practice design

Once decision requirements are clear, we apply AI tools for corporate training to generate structured scenarios, decision paths, and realistic variations aligned to enterprise risk and performance contexts. Learners practice judgment repeatedly rather than consuming static information. Human learning designers maintain oversight to protect learning integrity and relevance.

In this way, AI and learning design work together to strengthen thinking instead of replacing it.

Phase three: governance and outcome validation

We embed governance into the learning system from the start. Corporate learning management systems track evidence tied to decision behavior, not just completions. Review mechanisms ensure learning remains aligned as roles, policies, and risks evolve. This structure allows AI-powered platforms to operate at scale while maintaining defensibility.

How capability-first AI learning plays out in enterprise contexts

In practice, capability-first AI learning changes how enterprises design training for specific business situations rather than expanding content libraries. The following examples show how this approach reshapes compliance training and leadership readiness by focusing on decision quality, consistency, and defensible outcomes.

These outcomes depend not only on learning design choices, but also on disciplined solution design & development that embeds practice, governance, and evidence into delivery.

Compliance and policy training

In compliance training, organizations often use AI to generate faster modules when policies change. Audits continue to raise questions because learners interpret rules differently. A capability-first approach reframes training around decision scenarios tied directly to policy risk. Enterprises reduce interpretation variance and strengthen audit defensibility.

Leadership and role readiness programs

In leadership readiness programs, many corporate learning platforms focus on expanding content libraries. Behavior change remains limited. When organizations redesign learning around decision simulations and structured reflection, readiness becomes visible. Promotion discussions gain clarity. Confidence improves.

These outcomes show how AI for learning delivers value when applied to the right problem.

The measurable benefits enterprises experience with Mitr’s framework

Results tend to improve when organizations stop treating AI as a shortcut and start using it to strengthen capability. This direction is well supported by learning science and audit research, but the real difference shows up in how consistently it is applied. What changes outcomes is aligning AI initiatives to a learning strategy that holds decision quality and accountability steady as learning scales.

Stronger decision consistency across comparable roles

Studies in regulated industries show that scenario-based practice reduces interpretation variance by 30 to 40 percent compared to content-only training approaches.

Improved audit readiness and evidence quality

Organizations that define learning evidence before rollout demonstrate clearer traceability between training, policy updates, and learner decisions, leading to faster audit validation cycles.

Higher confidence in workforce readiness reporting

Research highlights increased executive confidence when corporate learning and development teams report readiness based on observed decision behavior rather than inferred participation.

Lower rework and sustained learning quality at scale

Enterprises that diagnose capability before scaling AI reduce rework caused by misaligned pilots and frequent redesigns. Learning remains stable as roles, policies, and expectations evolve, rather than requiring constant rebuilds.

Clearer accountability for learning outcomes

By linking learning to decision ownership, enterprises reduce ambiguity when outcomes fall short and strengthen collaboration between learning, risk, and business leaders.

Three questions to ask before expanding AI in your learning ecosystem

Before investing further in AI tools for corporate training, enterprises should ask three questions:

- Do we know which decisions learning must improve?

- Does AI support practice or replace thinking?

- Can we explain how learning outcomes remain governed as roles and risks change?

If these answers remain unclear, scaling AI for learning will scale the problem.

Frequently asked questions about AI in corporate learning

1. Will adopting AI automatically improve learning outcomes?

No. AI improves speed, personalization, and scale, but it does not automatically improve judgment or decision-making. Enterprises see better outcomes only when they first define which decisions must change, and then design learning around repeated, realistic practice. AI adds value when it scales well-designed capability, not when it replaces learning design discipline.

2. Why do AI learning initiatives show high engagement but weak business impact?

Because engagement is easier to generate than impact. Most AI-driven initiatives optimize how content is delivered, not how decisions are made. Learners click, complete, and respond well to personalization. But when they return to real situations, behavior often looks the same as before. Without structured practice and clear expectations around decision-making, activity increases while performance barely moves.

3. How should leaders measure learning effectiveness beyond completion rates?

Leaders should measure observable decision behavior instead of activity. Effective measures include decision consistency across comparable roles, performance in scenario-based assessments, and alignment between training content and current policies. These indicators provide stronger evidence of readiness than completion or engagement metrics alone.

4. What are the most common mistakes enterprises make when using AI for corporate learning?

Most issues start small. AI is introduced to move faster, content grows quickly, and early pilots look successful. Over time, it becomes clear that learning goals were never fully defined and governance was light. What remains is a large volume of material that is hard to defend when outcomes are questioned.

5. How can organizations make AI-enabled learning defensible during audits and reviews?

Defensibility comes from evidence and traceability. Learning needs to be mapped to specific decisions, connected to the latest approved policies, and supported by realistic scenarios that capture learner responses. Human review and approval records remain essential. AI can assist with scenario creation and analysis, but governance ensures the learning stays aligned, current, and accountable.

6. How long does it take to see a measurable impact from AI in learning?

It depends on how narrowly the effort is scoped. When AI is applied to a specific decision or role, organizations often begin to notice changes within a few months. Broader, enterprise-wide impact usually takes longer, since governance, capability mapping, and review cycles need time to settle. Teams that start with higher-risk or higher-impact decisions tend to see progress sooner.

How Mitr learning and media supports decision-ready enterprise learning

We work with enterprises where learning activity remains high, but confidence in outcomes has weakened. Engagements typically begin with a decision and capability diagnosis, followed by a learning design assessment and a structured roadmap. To support this work at scale, we apply BrinX.ai, our AI-powered learning solution, to help organizations design, govern, and evolve learning in ways that strengthen judgment, consistency, and defensibility.

AI in corporate learning succeeds when enterprises design capability first. Technology then accelerates what already works instead of amplifying what does not.

Explore how we apply capability-first AI learning in enterprise environments and start a focused discussion on creating decision-ready learning.