If you look at your learning dashboard right now, it probably looks fine. Role-based learning paths are in place. Employees receive content recommendations that match their function. Completion rates move steadily upward each quarter across your corporate LMS. On the surface, the system appears to be organized and active.

But let me ask you something most dashboards cannot answer clearly.

If a regulator walked in tomorrow, could you confidently show that training reduced operational risk? If a business unit expanded into a new market, could you identify which teams are truly ready and which are not? If a manager struggled with judgment calls, could you trace that gap back to a missing capability rather than a missing course?

This is where many enterprise leaders pause.

The reports look clean. The activity numbers look stable. Yet when the conversation shifts from “who completed what” to “who can actually do what,” the clarity fades. Personalization has improved access to content, but it has not necessarily improved readiness. Learning feels active, sometimes even impressive, yet it does not always feel decisive.

At Mitr Learning and Media, we see this pattern repeatedly across large organizations. Teams invest in better content libraries and smarter recommendations, and systems become good at suggesting the next course. What they do not always build is a clear structure for defining and tracking capability in real time. Skills sit inside job descriptions and learning paths without strong measurement, so leaders rely on completion data because that is the signal available.

Over time, this creates a quiet gap between learning delivery and workforce confidence. Leaders continue to fund training, employees continue to complete programs, and dashboards continue to show progress. Still, when someone asks whether learning improves judgment, reduces risk, or strengthens execution, the answer feels less precise than it should.

That gap is not a content problem. It is a structural one.

In this blog, we will look at what changes when personalization moves from courses to clearly defined skills. We will focus on structure rather than tools and stay grounded in enterprise reality. By the end, you should have a clearer view of how to connect learning activity to real capability and make decisions with greater confidence.

What Is a Learning Management System Responsible for in an Enterprise?

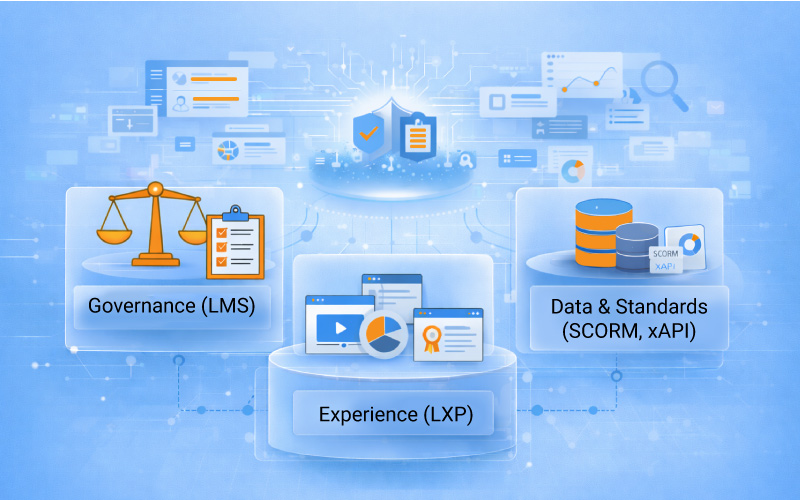

To choose the right learning management system, define the architecture first. Most enterprise learning environments include three layers.

1. The Control Layer: The Learning Management System

When leadership asks for proof, the training management system is typically where the answer lives. It shows how training was assigned, who followed through, how they performed, and whether credentials remain current. Over time, it becomes the organization’s reference point during reviews because it preserves a traceable record of learning activity.

In regulated environments, the LMS is not optional infrastructure. It is what proves that required training was completed, certifications were current, and access was managed by role and region. Without that structure, governance becomes difficult to demonstrate.

If compliance and governance matter, this layer must remain stable and defensible.

2. The Experience Layer: LXP and Discovery Tools

The role of an LXP is usually about making learning more discoverable. It surfaces recommendations, supports search, and helps employees navigate skills and content in a more flexible way. That can increase learning engagement.

That said, it does not take over the governance responsibilities of the learning management system. Completion history, certification status, and compliance reporting still sit in the system of record. For most large enterprises, both platforms operate side by side. The LMS maintains structure and accountability, while the LXP improves how learning feels and how easily it is discovered.

3. The Data and Standards Layer

This layer includes SCORM, xAPI, APIs, and identity management systems. Some organizations also rely on a learning content management system to create and version content, but if that content is not aligned with the learning data structure, reporting gaps can still appear. The data and standards layer determines:

- What data the system captures

- How content moves between platforms

- Whether data remains portable

- How integrations function

This layer sets the reporting ceiling. If the system captures limited data, analytics remain limited regardless of dashboard design.

Why Enterprise LMS Projects Fail

These projects do not fail because the software lacks features. They fail because teams choose a platform before defining how learning data, governance, and integration must work at scale.

The Feature Trap

Teams compare top LMS companies or review lists of top LMS platforms and broader learning management solutions based on:

- Course authoring tools

- Gamification

- Mobile access

- UI design

- Subscription cost

These features matter, but they do not determine long-term viability. Data structure, standards support, and integration maturity matter more at enterprise scale.

Architectural Debt

When organizations prioritize speed over structure, they accumulate architectural debt. They select an online learning management system that meets current needs but restricts:

- Advanced reporting

- Skills alignment

- Integration flexibility

- Data export

Correcting these limitations later requires content rebuilds, data migration projects, and workflow redesign.

Real-World Example: When Completion Data Was Not Enough

In one global financial services organization, a new learning management system was introduced to centralize compliance training across regions. At first, it did what it was meant to do. Completion rates were visible. Pass and fail status was easy to report. The pressure came later, during a regulatory review. Leaders were asked to show evidence of capability development by role, not just course completion. That level of reporting had never been designed into the system. The organization eventually had to restructure learning paths and revisit hundreds of existing courses. To obtain more useful insights, it also added integrations after the initial launch.

The Two-Year Rebuild

Many enterprises replace or heavily modify their learning platform within two to three years, often because the original system cannot scale to meet new expectations.

Avoiding this cycle requires architectural clarity from the start.

LMS vs LXP: What Is the Difference and Do Enterprises Need Both?

Is an LXP a Replacement for an LMS?

No. An LXP enhances learning, discovery, and personalization. It does not serve as the official system of record for compliance and certification.

Enterprises that replace the LMS entirely with an LXP often struggle to prove completion status during audits.

Where Should Completion Data Live?

Completion data and certification history are commonly retained in the primary learning system because that is where reporting and audit evidence are managed. The LXP enhances usability and exploration, but it does not replace the need for a centralized system of record.

SCORM vs xAPI: What Is the Real Difference?

The SCORM vs xAPI decision is not about which standard sounds more modern. It is about what kind of learning data you want to capture and whether your learning management system can support the level of insight your organization will need three years from now.

What SCORM Tracks

SCORM primarily tracks whether a learner launched a course, completed it, passed or failed the assessment, and how much time they spent inside the module.

For structured, compliance-driven employee training, especially in traditional e-learning environments, this level of tracking often meets regulatory requirements. It also supports standardized packaging with reliable completion records.

What xAPI Tracks

xAPI captures more granular data, including:

- Specific learner actions

- Progress within simulations

- Learning events outside the learning portal

- Task completion in connected systems

If your organization plans to measure behavior change, track learning across tools, or align training with performance systems, xAPI provides greater flexibility.
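To make the contrast concrete, here is a minimal sketch in Python. The SCORM keys are element names from the SCORM 1.2 run-time data model, and the xAPI statement follows the actor, verb, object, and result structure defined by the xAPI specification. The learner, email address, activity URL, and the `make_xapi_statement` helper are hypothetical and exist only for illustration.

```python
import json

# What a SCORM 1.2 package typically reports back to the LMS:
# a small, fixed set of data-model elements per learner attempt.
scorm_record = {
    "cmi.core.lesson_status": "passed",   # e.g. passed, failed, completed, incomplete
    "cmi.core.score.raw": "85",
    "cmi.core.session_time": "00:42:10",
}


def make_xapi_statement(email, name, verb, activity_id, activity_name, success=None):
    """Build a minimal xAPI statement: actor, verb, object, optional result.

    `verb` is assumed to be a term from the ADL verb vocabulary,
    such as "completed" or "attempted".
    """
    statement = {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {
            "id": f"http://adlnet.gov/expapi/verbs/{verb}",
            "display": {"en-US": verb},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
    }
    if success is not None:
        statement["result"] = {"success": success}
    return statement


# Hypothetical learner and activity: a single step inside a simulation,
# which a SCORM completion record would not show on its own.
stmt = make_xapi_statement(
    "learner@example.com",
    "Sample Learner",
    "completed",
    "https://example.com/activities/aml-simulation/step-3",
    "AML simulation: escalate suspicious transaction",
    success=True,
)
print(json.dumps(stmt, indent=2))
```

The SCORM record answers "did the learner finish and pass?", while each xAPI statement describes one event, so many statements can describe what the learner actually did along the way.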

What Happens During Migration?

The complexity of migration often shows up only after planning begins. Large libraries of SCORM content may need updates. xAPI functionality might not be fully supported in the target platform. Historical data can also prove difficult to map if the new system uses a different structure.

Tools such as SCORM Cloud support validation during content testing, but they do not replace a well-designed architecture.

How Data Standards in Your Learning Management System Affect Reporting and Skills Visibility

Detailed analytics cannot be reconstructed later if the underlying data was never captured. When a learning management system records only completions and scores, future planning conversations tend to rely on a narrow view of capability.

As organizations expand into workforce skilling, internal mobility, and capability mapping, learning data needs to connect with skills frameworks. Without consistent data standards, information spreads across platforms, and reporting becomes harder to consolidate.
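As a rough illustration of what connecting learning data to a skills framework can mean, the sketch below maps completed courses to the skills a role requires. The course IDs, skill names, role, and the `skill_gaps` function are all hypothetical, and it assumes a course completion counts as evidence for a skill, which a real framework would likely qualify with assessment data.

```python
# Hypothetical mapping: which skills each course is assumed to evidence.
COURSE_SKILLS = {
    "AML-101": {"transaction monitoring"},
    "KYC-201": {"customer due diligence", "sanctions screening"},
}

# Hypothetical mapping: which skills each role requires.
ROLE_SKILLS = {
    "compliance analyst": {
        "transaction monitoring",
        "customer due diligence",
        "escalation judgment",
    },
}


def skill_gaps(role, completed_courses):
    """Return (covered, missing) skill sets for a role, given completed course IDs."""
    required = ROLE_SKILLS[role]
    evidenced = set()
    for course in completed_courses:
        # Unknown courses contribute no skills rather than raising an error.
        evidenced |= COURSE_SKILLS.get(course, set())
    covered = required & evidenced
    return covered, required - covered


covered, missing = skill_gaps("compliance analyst", ["AML-101", "KYC-201"])
print(sorted(covered))   # ['customer due diligence', 'transaction monitoring']
print(sorted(missing))   # ['escalation judgment']
```

In this example the learner has completed every assigned course, yet one required skill has no supporting evidence. That is the difference between a completion report and a capability report.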

Before selecting an enterprise learning system, define:

- What reports leadership requires

- What audit evidence regulators expect

- What skills data talent teams need

Choose standards and platforms that support those outcomes.

Enterprise LMS Integration Requirements: HRIS, SSO, and API Considerations

Many vendors highlight how many users their online learning management system supports. User count alone does not define scalability.

In large organizations, learning portals often serve different regions or departments, which makes integration and identity management even more critical.

HRIS Integration

Automated user provisioning reduces manual work and prevents compliance gaps. When employees join, change roles, or exit, the system must update automatically.

Manual account management at enterprise scale introduces risk and administrative cost.
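A minimal sketch of the reconciliation step behind automated provisioning might look like the following. It assumes the HRIS roster and the LMS account list are both available as simple mappings from employee ID to role. The `plan_sync` function and the sample IDs are hypothetical, and a real integration would call the vendor's own provisioning interface rather than return a plan.

```python
def plan_sync(hris_roster, lms_accounts):
    """Compare the HRIS roster with LMS accounts and return the actions needed.

    Both arguments are dicts of employee_id -> role. The HRIS is treated
    as the source of truth.
    """
    hris_ids = set(hris_roster)
    lms_ids = set(lms_accounts)
    return {
        # Joiners: in HRIS but not yet in the LMS.
        "create": sorted(hris_ids - lms_ids),
        # Role changes: present in both, but the role differs.
        "update_role": sorted(
            emp for emp in hris_ids & lms_ids
            if hris_roster[emp] != lms_accounts[emp]
        ),
        # Leavers: still in the LMS but no longer in HRIS.
        "deactivate": sorted(lms_ids - hris_ids),
    }


hris = {"e1": "analyst", "e2": "manager", "e4": "analyst"}
lms = {"e1": "analyst", "e2": "analyst", "e3": "analyst"}
plan = plan_sync(hris, lms)
print(plan)
# {'create': ['e4'], 'update_role': ['e2'], 'deactivate': ['e3']}
```

Running a comparison like this on a schedule, or on every HRIS change event, is what keeps role-based assignments and access aligned without manual account work.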

Single Sign-On and Identity Management

SSO improves adoption and reduces password fatigue. It also strengthens security controls. Enterprises must confirm that the LMS software integrates cleanly with identity providers.

API Maturity and Ecosystem Integration

An enterprise learning management system should connect with:

- HR systems

- Talent management platforms

- Performance systems

- Collaboration tools

Weak APIs limit integration and require custom development. Over time, these limitations increase maintenance costs.

Governance defines whether the corporate LMS truly protects the organization.

Version Control and Certification Logic

The system must track which version of a course a learner has completed. It must manage certification renewals and maintain clear historical records.
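The logic described above can be sketched in a few lines. This example assumes two policy rules that the organization would need to confirm: a certification lapses after a fixed number of days, and a completion against an older course version no longer counts. The `Completion` record and `certification_status` function are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Completion:
    learner_id: str
    course_id: str
    course_version: str
    completed_on: date


def certification_status(completion, current_version, valid_days, today):
    """Return 'current', 'expired', or 'outdated_version' for one completion."""
    # Assumed policy: a new course version invalidates earlier completions.
    if completion.course_version != current_version:
        return "outdated_version"
    # Assumed policy: certification is valid for `valid_days` after completion.
    if today > completion.completed_on + timedelta(days=valid_days):
        return "expired"
    return "current"


record = Completion("e1", "AML-101", "v2", date(2024, 1, 15))
print(certification_status(record, "v2", 365, date(2024, 12, 1)))  # current
print(certification_status(record, "v2", 365, date(2025, 2, 1)))   # expired
print(certification_status(record, "v3", 365, date(2024, 12, 1)))  # outdated_version
```

Storing the course version alongside the completion date is what makes the third case answerable at all. If only the completion date is kept, the system cannot tell which content the learner actually saw.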

Audit Traceability

Time-stamped logs and preserved records become especially important when scrutiny increases. During regulatory reviews, leadership is expected to provide clear and consistent documentation, and those records often determine how defensible the learning process appears.

Data Privacy and Regional Compliance

An enterprise LMS must align with regional regulations such as GDPR. Data residency requirements may vary by country. Organizations operating globally must verify where data resides and how the vendor manages privacy controls.

Governance cannot function as an afterthought. It must shape platform selection from the beginning.

LMS Pricing, Vendor Lock-In, and Total Cost of Ownership

LMS pricing often appears straightforward. Many vendors package their platforms as comprehensive learning management solutions, which can make comparison difficult without architectural clarity.

Subscription fees, user tiers, and implementation packages look clear on paper. The real cost emerges over time.

How Vendor Lock-In Occurs

Lock-in usually develops over time rather than through a single contract clause. Reporting models are designed around one platform. Integrations rely on restricted APIs. Custom configurations become difficult to replicate elsewhere. Even content formats can make portability harder than anticipated. These dependencies are not obvious during implementation. They surface later, especially when organizations attempt to streamline systems or shift vendors.

What to Ask Before Signing

- Can we export all learning records in a standard format?

- Can we migrate content without rebuilding it?

- How does the system handle consolidation after acquisition?

- What integration options remain available if we switch vendors?
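To test the first question in practice, it helps to know what a portable export looks like. The sketch below writes learning records to CSV with a fixed, documented column order using Python's standard library. CSV is used here only as a stand-in for "a standard format", and the field names and the `export_records_csv` function are hypothetical. A given vendor may instead offer xAPI statements or its own schema.

```python
import csv
import io

# Hypothetical export schema: a fixed column order that any target system can map.
FIELDS = ["learner_id", "course_id", "course_version", "status", "completed_at"]


def export_records_csv(records):
    """Serialize learning records (a list of dicts) to CSV text.

    Keys not listed in FIELDS are dropped; missing keys become empty cells.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=FIELDS, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = [
    {
        "learner_id": "e1",
        "course_id": "AML-101",
        "course_version": "v2",
        "status": "passed",
        "completed_at": "2024-01-15T09:30:00Z",
        "internal_vendor_flag": "x",  # platform-specific field, left out of the export
    },
]
csv_text = export_records_csv(records)
print(csv_text)
```

If a vendor cannot produce something equivalent to this for every historical record, including course version and timestamps, migration and audit evidence both become harder later.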

Total Cost Beyond Subscription Fees

The subscription price is only one part of the equation. Over time, costs show up in other places. Content may need to be rebuilt. Data has to be migrated carefully. Integrations often require redesign. Administrators and managers need time to adjust to new workflows.

When comparing LMS platforms, it helps to look beyond license pricing and consider the operational effort the decision will carry over the long term.

A Practical Framework for Choosing the Right LMS for Employee Training

Enterprise buyers benefit from a structured decision process.

Step 1: Define Compliance Exposure

Clarify regulatory obligations. Identify required reports and certification tracking logic. Ensure that the learning management system can produce that compliance evidence consistently.

Step 2: Define Data and Reporting Requirements

Determine what data leadership needs to make workforce decisions. Align standards such as SCORM or xAPI with those reporting goals.

Step 3: Map Integration Dependencies

List all systems that must connect with the LMS, including HRIS, identity management, and talent platforms. Validate API capabilities early.

Step 4: Align with Skills and Workforce Strategy

If the organization plans to invest in workforce skilling or internal mobility, confirm that learning management platforms support structured skills data.

Step 5: Evaluate LMS Platforms Last

After defining the architecture, compare LMS software options and other learning management solutions against those requirements. Assess how each vendor supports them rather than adjusting the requirements to match the tool.

Making a Confident Enterprise Decision

Choosing a learning management system shapes how an organization governs training, measures capability, and scales learning across regions. The decision affects compliance, reporting, integration, and workforce learning strategy for years.

Treating LMS selection as architecture design reduces risk and protects long-term investment.

A learning management software decision has implications that extend beyond training delivery. It influences governance, reporting clarity, and how learning operates across regions. Over time, those structural decisions affect compliance and workforce visibility.

This approach also helps organizations evaluate learning management solutions based on structure rather than surface features. Defining governance and data expectations upfront makes the evaluation process more realistic.

At Mitr Learning and Media, we often work with organizations that want this level of structure before committing to a platform. The goal is to align systems with workforce strategy in a way that supports measurable, defensible outcomes.

Book a consultation to evaluate whether your current learning management system supports long-term scalability, governance, and skills visibility.

Frequently Asked Questions About AI in Corporate Learning

1. Will adopting AI automatically improve learning outcomes?

No. AI improves speed, personalization, and scale, but it does not automatically improve judgment or decision-making. Enterprises see better outcomes only when they first define which decisions must change, and then design learning around repeated, realistic practice. AI adds value when it scales well-designed capability, not when it replaces learning design discipline.

2. Why do AI learning initiatives show high engagement but weak business impact?

Because engagement is easier to generate than impact. Most AI-driven initiatives optimize how content is delivered, not how decisions are made. Learners click, complete, and respond well to personalization. But when they return to real situations, behavior often looks the same as before. Without structured practice and clear expectations around decision-making, activity increases while performance barely moves.

3. How should leaders measure learning effectiveness beyond completion rates?

Leaders should measure observable decision behavior instead of activity. Effective measures include decision consistency across comparable roles, performance in scenario-based assessments, and alignment between training content and current policies. These indicators provide stronger evidence of readiness than completion or engagement metrics alone.

4. What are the most common mistakes enterprises make when using AI for corporate learning?

Most issues start small. AI is introduced to move faster, content grows quickly, and early pilots look successful. Over time, it becomes clear that learning goals were never fully defined and governance was light. What remains is a large volume of material that is hard to defend when outcomes are questioned.

5. How can organizations make AI-enabled learning defensible during audits and reviews?

Defensibility comes from evidence and traceability. Learning needs to be mapped to specific decisions, connected to the latest approved policies, and supported by realistic scenarios that capture learner responses. Human review and approval records remain essential. AI can assist with scenario creation and analysis, but governance ensures the learning stays aligned, current, and accountable.

6. How long does it take to see a measurable impact from AI in learning?

It depends on how narrowly the effort is scoped. When AI is applied to a specific decision or role, organizations often begin to notice changes within a few months. Broader, enterprise-wide impact usually takes longer, since governance, capability mapping, and review cycles need time to settle. Teams that start with higher-risk or higher-impact decisions tend to see progress sooner.

How Mitr Learning and Media Supports Decision-Ready Enterprise Learning

We work with enterprises where learning activity remains high, but confidence in outcomes has weakened. Engagements typically begin with a decision and capability diagnosis, followed by a learning design assessment and a structured roadmap. To support this work at scale, we apply BrinX.ai, our AI-powered learning solution, to help organizations design, govern, and evolve learning in ways that strengthen judgment, consistency, and defensibility.

AI in corporate learning succeeds when enterprises design capability first. Technology then accelerates what already works instead of amplifying what does not.

Explore how we apply capability-first AI learning in enterprise environments and start a focused discussion on creating decision-ready learning.